Deep Inverse Rendering for High-resolution SVBRDF Estimation from an Arbitrary Number of Images

- 1Tsinghua University

- 2Microsof Research Asia

- 3University of Science and Technology of China

- 4 College of William & Mary

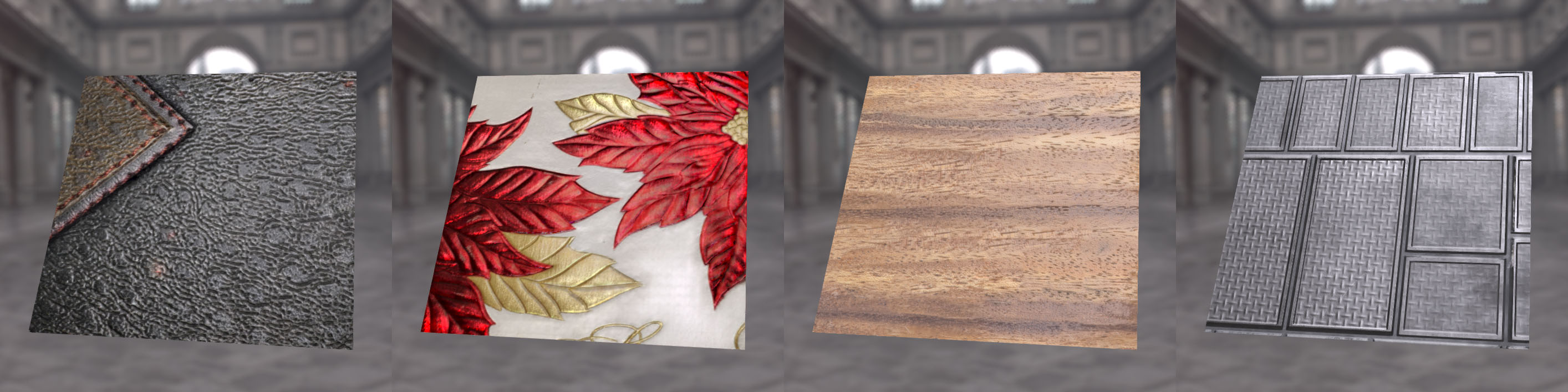

Visualizations under natural lighting of four captured 1 k resolution SVBRDFs estimated using our deep inverse rendering framework. The leather material (left) is reconstructed from just 2 input photographs captured with a mobile phone camera and flash, while the other materials are recovered from 20 input photographs.

Abstract

In this paper we present a unified deep inverse rendering framework for estimating the spatially-varying appearance properties of a planar exemplar from an arbitrary number of input photographs, ranging from just a single photograph to many photographs. The precision of the estimated appearance scales from plausible when the input photographs fails to capture all the reflectance information, to accurate for large input sets. A key distinguishing feature of our framework is that it directly optimizes for the appearance parameters in a latent embedded space of spatially-varying appearance, such that no handcrafted heuristics are needed to regularize the optimization. This latent embedding is learned through a fully convolutional auto-encoder that has been designed to regularize the optimization. Our framework not only supports an arbitrary number of input photographs, but also at high resolution. We demonstrate and evaluate our deep inverse rendering solution on a wide variety of publicly available datasets.

|

*The first two authors contributed equally to this paper. KeywordsAppearance modeling, Inverse rendering Paper and videoTrained model and code |

AcknowledgementsWe would like to thank the reviewers for their constructive feedback. We also thank Baining Guo for discussions and suggestions. Pieter Peers was partially supported by NSF grant IIS-1350323 and gifts from Google, Activision, and Nvidia. Duan Gao and Kun Xu are supported by the National Natural Science Foundation of China (Project Numbers: 61822204, 61521002). |