Appearance-from-Motion: Recovering Spatially Varying Surface Reflectance under Unknown Lighting

- Yue Dong¹

- Guojun Chen²

- Pieter Peers³

- Jiawan Zhang²

- Xin Tong¹˒²

- ¹Microsoft Research

- ²Tianjin University

- ³College of William & Mary

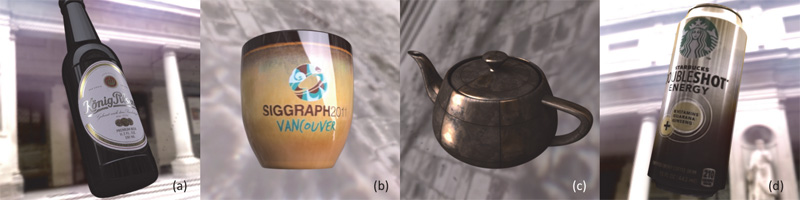

Appearance-from-Motion. Renderings, under the “Uffizi Gallery” light probe, of surface reflectance recovered under unknown illumination: (a) “beer bottle”, (b) “coffee mug”, (c) “rusted copper”, and (d) “Starbucks can”.

Abstract

We present “appearance-from-motion”, a novel method for recovering the spatially varying isotropic surface reflectance of a subject with known geometry from a video of that subject rotating under unknown natural illumination. We formulate appearance recovery as an iterative process that alternates between estimating surface reflectance and estimating incident lighting. We characterize the surface reflectance with a data-driven microfacet model, and recover the microfacet normal distribution for each surface point separately from temporal changes in the observed radiance. To regularize the recovery of the incident lighting, we rely on the observation that natural lighting is sparse in the gradient domain. Furthermore, we exploit the sparsity of strong edges in the incident lighting to improve the robustness of the surface reflectance estimation. We demonstrate robust recovery of spatially varying isotropic reflectance from captured video, as well as from an internet video sequence, for a wide variety of materials and natural lighting conditions.
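To make the alternating structure of the abstract concrete, the following is a toy sketch under heavily simplified, hypothetical assumptions. It is not the authors' method. A scalar albedo `rho[p]` stands in for the data-driven microfacet model, the lighting `L` is a 1D circular array of bins, and at frame `t` each point `p` sees a single known bin `idx[p, t]` (the subject's rotation shifts which bin that is). The gradient-domain sparsity prior is modeled as an L1 penalty on finite differences of `L` and solved with iteratively reweighted least squares (IRLS), which is a swapped-in solver. All function names (`estimate_reflectance`, `estimate_lighting`, `alternate`, `make_toy_data`) are illustrative.

```python
import numpy as np


def gradient_matrix(n):
    """Circular forward-difference operator D with (D @ L)[j] = L[j+1] - L[j]."""
    D = -np.eye(n)
    D[np.arange(n), (np.arange(n) + 1) % n] = 1.0
    return D


def estimate_reflectance(I, L, idx):
    """Per-point least-squares albedo given the current lighting (toy stand-in for a BRDF fit)."""
    Lp = L[idx]  # lighting seen by point p at frame t
    return (I * Lp).sum(axis=1) / np.maximum((Lp ** 2).sum(axis=1), 1e-12)


def estimate_lighting(I, rho, idx, n, lam=0.05, irls_iters=10, eps=1e-3):
    """Minimize sum (I - rho*L[idx])^2 + lam * ||D L||_1 by IRLS."""
    a = np.zeros(n)  # diagonal of the data-term normal matrix
    b = np.zeros(n)  # right-hand side of the normal equations
    np.add.at(a, idx, np.broadcast_to(rho[:, None] ** 2, idx.shape))
    np.add.at(b, idx, rho[:, None] * I)
    D = gradient_matrix(n)
    # Start from the unregularized solution so IRLS weights reflect real edges.
    L = b / np.maximum(a, 1e-12)
    for _ in range(irls_iters):
        # |x| <= x^2 / (2|x_k|) + const, so each step is a weighted least-squares solve.
        w = 1.0 / np.maximum(np.abs(D @ L), eps)
        M = np.diag(a) + 0.5 * lam * D.T @ (w[:, None] * D)
        L = np.linalg.solve(M, b)
    return L


def alternate(I, idx, n, outer_iters=5, lam=0.05):
    """Alternate reflectance and lighting estimates, fixing the global scale ambiguity."""
    L = np.ones(n)
    for _ in range(outer_iters):
        rho = estimate_reflectance(I, L, idx)
        L = estimate_lighting(I, rho, idx, n, lam)
        s = L.mean()  # rho * L is unchanged by (rho * s, L / s)
        L, rho = L / s, rho * s
    return rho, L


def make_toy_data(seed=0, n=64, P=40, noise=0.01):
    """Synthetic 'video': piecewise-constant lighting observed by P points over n frames."""
    rng = np.random.default_rng(seed)
    L_true = np.full(n, 0.2)
    L_true[10:18] = 3.0  # a bright "window" with sharp edges
    L_true[40:44] = 1.5
    rho_true = rng.uniform(0.3, 1.0, P)
    offsets = rng.integers(0, n, P)
    idx = (np.arange(n)[None, :] + offsets[:, None]) % n  # bin seen by point p at frame t
    I = rho_true[:, None] * L_true[idx] + noise * rng.standard_normal((P, n))
    return I, idx, L_true, rho_true


if __name__ == "__main__":
    I, idx, L_true, rho_true = make_toy_data()
    rho, L = alternate(I, idx, L_true.size)
    print("lighting correlation:", np.corrcoef(L, L_true)[0, 1])
```

Because reflectance and lighting enter the observations as a product, they are only recoverable up to a global scale, which is why the sketch renormalizes `L` to unit mean after each lighting step. The L1 penalty on differences favors piecewise-smooth lighting with a few strong edges, which loosely mirrors the gradient-domain sparsity assumption described above.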

Keywords

Appearance Modeling, Unknown Lighting

Downloads

Data: Part of the captured data has been processed and converted to a renderer-friendly format. The data can be accessed here.

Acknowledgements

We wish to thank the reviewers for their constructive feedback. Pieter Peers was partially funded by NSF grants IIS-1217765 and IIS-1350323, and by a gift from Google.

Errata

One relevant paper [Palma et al. 2013] should have been cited. We would like to correct this missing citation and acknowledge this related work:

Gianpaolo Palma, Nicola Desogus, Paolo Cignoni, and Roberto Scopigno. Int. Conf. Digital Heritage 2013, Volume 1, pages 31–38, 2013.