Classifier Guided Temporal Supersampling for Real-time Rendering


Microsoft Research Asia

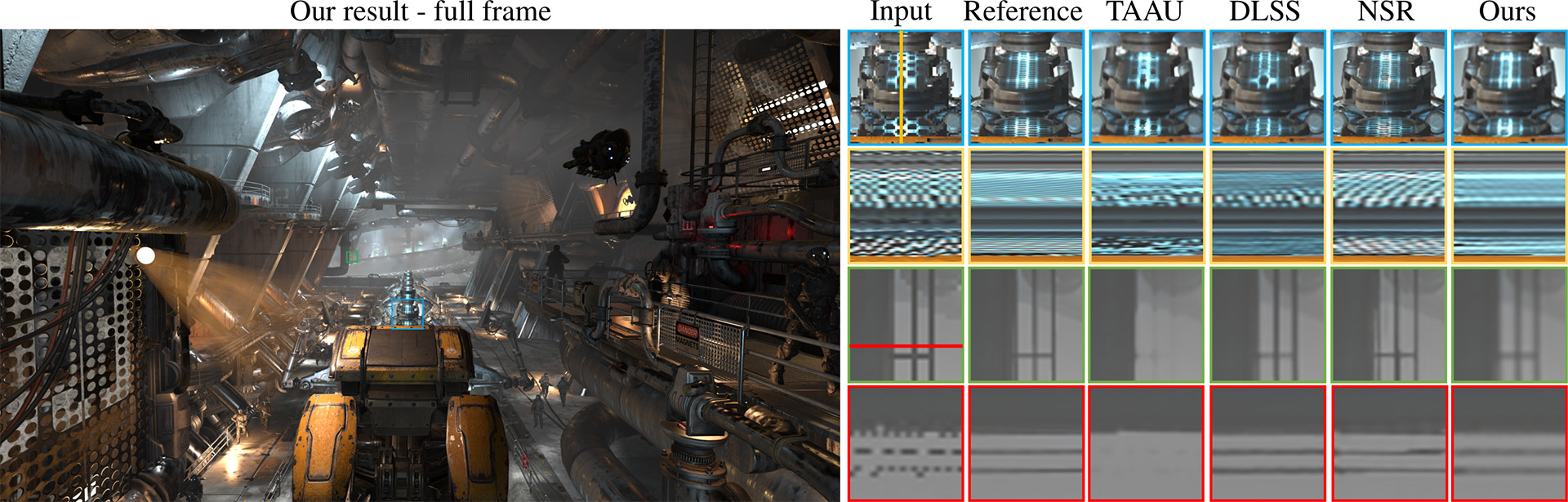

Supersampling result of our method. Given a low-resolution, aliased 1920x1080 input, our method generates an alias-free 3840x2160 output that remains temporally stable across frames. Classical methods such as TAAU [YLS20] fail to reconstruct fine details (see the zoom-in views in the odd rows of the right column), while the learning-based solution [XNC∗20] is slow and exhibits temporal jittering (see the temporal profiles in the even rows of the right column). Our method produces results of similar quality to DLSS without requiring dedicated hardware or software.

Abstract

We present a learning-based temporal supersampling algorithm for real-time rendering. Unlike existing learning-based approaches that train a 'black-box' neural network end to end, we design a 'white-box' solution that first classifies pixels into different categories and then generates the supersampling result based on that classification. Our key observation is that the core problem in temporal supersampling for rendering is distinguishing pixels affected by occlusion, aliasing, or shading changes. Samples from these pixels exhibit similar temporal radiance changes but require different composition strategies to produce the correct supersampling result. Based on this observation, our method first classifies the pixels into several classes. Using the classification results, it then blends the current frame with the warped last frame via a learned weight map to produce the supersampling result. We design compact neural networks for each step and develop dedicated loss functions for pixels belonging to different classes. Compared to existing learning-based methods, our classifier-based supersampling scheme requires less computation and memory for real-time supersampling and produces visually compelling temporal supersampling results with fewer flickering artifacts. We evaluate the performance and generality of our method on several rendered game sequences; our method upsamples rendered frames from 1080p to 2160p in just 13.39 ms on a single NVIDIA RTX 3090 GPU.
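The final blending step described in the abstract can be illustrated with a small sketch. The abstract only states that the current frame is blended with the warped last frame through a learned per-pixel weight map; everything else here is an assumption made for illustration: single-channel images stored as nested Python lists, integer motion vectors measured in output pixels, nearest-neighbour replication to bring the current frame up to output resolution (1080p to 2160p is a factor of 2 per axis, i.e. 4x the pixels), and a weight map passed in directly rather than predicted by the classifier-conditioned network. The helper names `upsample_nearest`, `warp_previous`, and `blend` are hypothetical.

```python
"""Sketch of a warp-and-blend temporal supersampling step.

Assumptions (illustrative only, not taken from the paper): single-channel
images as nested lists, integer per-pixel motion vectors in output pixels,
nearest-neighbour upsampling of the current frame, and a weight map given
as input (the paper predicts it with a network conditioned on the pixel
classification).
"""
from typing import List, Tuple

Image = List[List[float]]
MotionField = List[List[Tuple[int, int]]]


def upsample_nearest(img: Image, factor: int) -> Image:
    """Replicate each input pixel into a factor x factor block."""
    height, width = len(img), len(img[0])
    return [
        [img[y // factor][x // factor] for x in range(width * factor)]
        for y in range(height * factor)
    ]


def warp_previous(prev: Image, motion: MotionField) -> Image:
    """Backward-warp the previous output frame.

    Pixel (x, y) of the result reads prev at (x - dx, y - dy), where
    (dx, dy) is the assumed screen-space motion from the previous frame to
    the current one. Reads outside the image are clamped to the border.
    """
    height, width = len(prev), len(prev[0])
    warped = []
    for y in range(height):
        row = []
        for x in range(width):
            dx, dy = motion[y][x]
            sx = min(max(x - dx, 0), width - 1)
            sy = min(max(y - dy, 0), height - 1)
            row.append(prev[sy][sx])
        warped.append(row)
    return warped


def blend(current: Image, warped: Image, weight: Image) -> Image:
    """Per-pixel blend: out = w * current + (1 - w) * warped, with w in [0, 1]."""
    return [
        [w * c + (1.0 - w) * p for c, p, w in zip(c_row, p_row, w_row)]
        for c_row, p_row, w_row in zip(current, warped, weight)
    ]


if __name__ == "__main__":
    low_res = [[0.0, 1.0], [1.0, 0.0]]            # 2x2 current frame
    current = upsample_nearest(low_res, 2)        # 4x4, 2x per axis
    previous = [[0.5] * 4 for _ in range(4)]      # previous 4x4 output
    motion = [[(0, 0)] * 4 for _ in range(4)]     # static camera
    weight = [[0.25] * 4 for _ in range(4)]       # hand-set stand-in for the learned map
    result = blend(current, warp_previous(previous, motion), weight)
    print(result[0])  # [0.375, 0.375, 0.625, 0.625]
```

In a typical TAA-style scheme, a weight of 1 discards the history entirely, which is what one would want at disoccluded pixels, while weights near 0 accumulate history across frames. Because occlusion, aliasing, and shading changes look alike in the radiance difference alone, a single hand-set weight cannot serve all three cases; this is the motivation the abstract gives for classifying pixels first and learning the weights.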

Keywords

Real-time rendering, Supersampling

Acknowledgements

We would like to thank the reviewers for their constructive feedback; Chong Zeng, Jilong Xue, Yuqing Xia, Wei Cui, and the NNFusion [MXY∗20] team for their support in optimizing the HLSL shader code; and Youkang Kong for help implementing the NSR method [XNC∗20]. We would also like to thank people from the Microsoft Xbox and xCloud teams for valuable discussions, including Daniel Kennett, Hoi Vo, Matt Bronder, and Andrew Goossen.