Deep Reflectance Scanning: Recovering Spatially-varying Material Appearance from a Flash-lit Video Sequence

- Wenjie Ye (1, 2)

- Yue Dong (2)

- Pieter Peers (3)

- Baining Guo (2)

- (1) Tsinghua University

- (2) Microsoft Research Asia

- (3) College of William & Mary

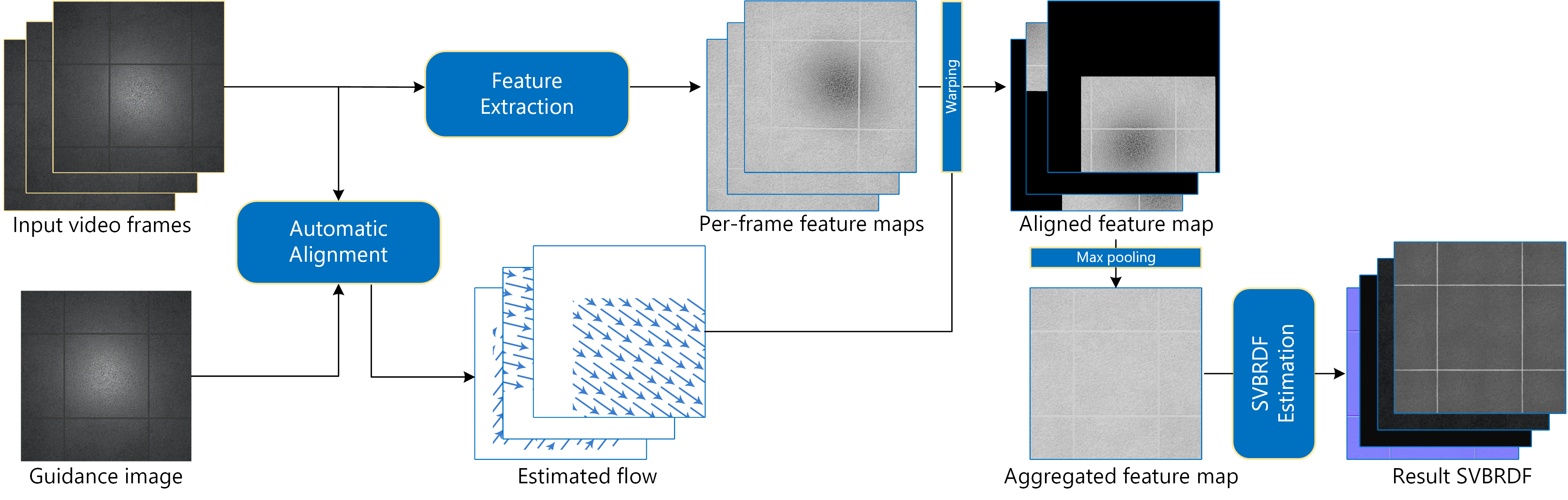

Overview of our method. Given a guidance image and an input video, we first compute a warp function between each input video frame and the guidance image by estimating per-frame optical flow. Next, we extract SVBRDF feature vectors for each input frame and align (warp) the feature maps. Finally, the SVBRDF is reconstructed from the aggregated (max-pooled) feature maps.
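The align-and-pool step in the overview can be illustrated with a minimal NumPy sketch. This is not the released TensorFlow implementation: in the method, the flow and the feature maps come from learned networks, whereas here they are plain input arrays. The function names `warp_features` and `aggregate_max`, the flow convention (offsets from guidance pixels to frame pixels), and the nearest-neighbour sampling are all assumptions made for illustration.

```python
import numpy as np

def warp_features(features, flow):
    """Backward-warp a frame's feature map into the guidance image grid.

    features: (Hf, Wf, C) feature map of one video frame.
    flow:     (Hg, Wg, 2) assumed convention: flow[y, x] = (dx, dy) is the
              offset from guidance pixel (x, y) to its match in the frame.
    Returns the warped (Hg, Wg, C) map and an (Hg, Wg) validity mask that is
    False where the guidance pixel falls outside the frame.
    """
    hf, wf, _ = features.shape
    hg, wg, _ = flow.shape
    ys, xs = np.mgrid[0:hg, 0:wg]
    # Nearest-neighbour lookup for brevity (an illustrative simplification).
    src_x = np.rint(xs + flow[..., 0]).astype(int)
    src_y = np.rint(ys + flow[..., 1]).astype(int)
    valid = (src_x >= 0) & (src_x < wf) & (src_y >= 0) & (src_y < hf)
    warped = features[np.clip(src_y, 0, hf - 1), np.clip(src_x, 0, wf - 1)]
    return warped, valid

def aggregate_max(warped_list, valid_list):
    """Max-pool aligned feature maps over frames, ignoring invalid pixels."""
    stack = np.stack(warped_list)             # (N, Hg, Wg, C)
    mask = np.stack(valid_list)[..., None]    # (N, Hg, Wg, 1)
    pooled = np.where(mask, stack, -np.inf).max(axis=0)
    covered = mask.any(axis=0)                # seen by at least one frame
    # Pixels no frame covers are set to 0 rather than left at -inf.
    return np.where(covered, pooled, 0.0), covered[..., 0]
```

Because the pooling is masked per pixel, a guidance pixel only needs to be visible in one frame to receive features, which is why the guidance grid in this sketch may be larger than any single frame.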

Abstract

In this paper we present a novel method for recovering high-resolution spatially-varying isotropic surface reflectance of a planar exemplar from a flash-lit close-up video sequence captured with a regular hand-held mobile phone. We do not require careful calibration of the camera and lighting parameters, but instead compute a per-pixel flow map using a deep neural network to align the input video frames. For each video frame, we also extract the reflectance parameters, warp the neural reflectance features directly using the per-pixel flow, and subsequently pool the warped features. Our method facilitates convenient hand-held acquisition of spatially-varying surface reflectance with commodity hardware by non-expert users. Furthermore, our method enables aggregation of reflectance features from surface points visible in only a subset of the captured video frames, enabling the creation of high-resolution reflectance maps that exceed the native camera resolution. We demonstrate and validate our method on a variety of synthetic and real-world spatially-varying materials.

Paper

Implementation

Our implementation is based on TensorFlow. Please visit the GitHub page to see details.

Data downloads

Use of the released models and data is restricted to academic research purposes only.

Acknowledgements

We would like to thank the reviewers for their constructive feedback. Pieter Peers was partially supported by NSF grant IIS-1350323 and a gift from Nvidia.