AppGen: Interactive Material Modeling from a Single Image

- Yue Dong (1, 2)

- Xin Tong (2)

- Fabio Pellacini (3, 4)

- Baining Guo (1, 2)

- (1) Tsinghua University

- (2) Microsoft Research Asia

- (3) Dartmouth College

- (4) Sapienza University of Rome

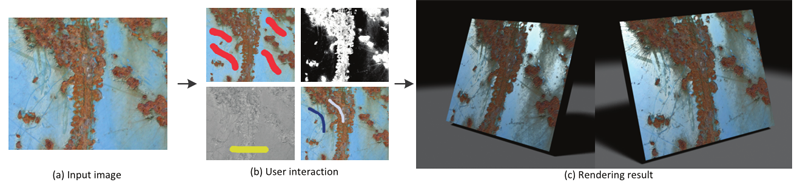

Given a single image (a), our system models the spatially varying reflectance properties and normals with a few strokes specified by the user (b). The resulting material can be rendered under different lighting and viewing conditions (c).

Abstract

We present AppGen, an interactive system for modeling materials from a single image. Given a texture image of a nearly planar surface lit by directional lighting, our system models the detailed spatially varying reflectance properties (diffuse, specular, and roughness) and surface normal variations with minimal user interaction. Users roughly mark the image with a few strokes to indicate global shading and reflectance information, and our system then assigns reflectance properties and normals to each pixel. We first interactively decompose the input image into the product of a diffuse albedo map and a shading map. A two-scale normal reconstruction algorithm then recovers the normal variations from the shading map while preserving geometric features at different scales. Finally, we assign the specular parameters to each pixel, guided by the user strokes and the diffuse albedo. Our system generates convincing results within minutes of interaction and works well for a variety of natural and man-made materials that exhibit different reflectance and normal variations.
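The pipeline in the abstract rests on a per-pixel image-formation model: a diffuse albedo multiplied by a shading term, plus a specular highlight. The Python sketch below illustrates that model under stated assumptions and is not the AppGen implementation. It assumes a Lambertian shading term max(0, n·l) under a single directional light, uses Blinn-Phong as a stand-in specular lobe (this summary does not say which specular model the paper uses), and uses a plain box-filter base/detail split only to illustrate what a "two-scale" separation of the shading map means. All function names (`lambert_shading`, `shade_pixel`, `shading_from_albedo`, `two_scale_split`) are hypothetical.

```python
import math

Vec = tuple[float, float, float]


def normalize(v: Vec) -> Vec:
    """Scale a nonzero 3-vector to unit length."""
    n = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / n, v[1] / n, v[2] / n)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lambert_shading(normal: Vec, light: Vec) -> float:
    """Assumed diffuse shading term S = max(0, n . l) for one directional light."""
    return max(0.0, dot(normalize(normal), normalize(light)))


def shade_pixel(albedo: float, normal: Vec, light: Vec, view: Vec,
                specular: float, shininess: float) -> float:
    """Albedo * shading plus a Blinn-Phong highlight (stand-in specular model).

    A larger shininess gives a tighter highlight (a smoother surface); how the
    paper's roughness parameter maps to a lobe is not assumed here.
    Light and view must not point in exactly opposite directions.
    """
    s = lambert_shading(normal, light)
    if s == 0.0:
        return 0.0  # surface faces away from the light
    l, v = normalize(light), normalize(view)
    h = normalize((l[0] + v[0], l[1] + v[1], l[2] + v[2]))  # half vector
    highlight = specular * max(0.0, dot(normalize(normal), h)) ** shininess
    return albedo * s + highlight


def shading_from_albedo(image: list[list[float]],
                        albedo: list[list[float]]) -> list[list[float]]:
    """Invert I = A * S per pixel to get the shading map S = I / A (A > 0)."""
    return [[i / a for i, a in zip(img_row, alb_row)]
            for img_row, alb_row in zip(image, albedo)]


def two_scale_split(row: list[float],
                    radius: int = 1) -> tuple[list[float], list[float]]:
    """Split a 1-D shading profile into a box-filtered base and a residual detail.

    base + detail reproduces the input; this is a generic low/high-pass split.
    """
    base = []
    for i in range(len(row)):
        lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
        base.append(sum(row[lo:hi]) / (hi - lo))
    detail = [x - b for x, b in zip(row, base)]
    return base, detail


if __name__ == "__main__":
    up = (0.0, 0.0, 1.0)
    # Flat pixel lit and viewed head-on, no specular: albedo * 1.0
    print(shade_pixel(0.5, up, up, up, specular=0.0, shininess=20.0))  # 0.5
```

In this toy model, dividing the rendered image by the albedo recovers the shading exactly only when the specular term is zero; otherwise highlights leak into the estimated shading map.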

Keywords: appearance modeling, user interaction

BibTeX

```bibtex
@article{Dong:2011:AIM,
  author = {Dong, Yue and Tong, Xin and Pellacini, Fabio and Guo, Baining},
  title = {AppGen: interactive material modeling from a single image},
  journal = {ACM Trans. Graph.},
  issue_date = {December 2011},
  volume = {30},
  number = {6},
  month = dec,
  year = {2011},
  issn = {0730-0301},
  pages = {146:1--146:10},
  articleno = {146},
  numpages = {10},
  url = {http://doi.acm.org/10.1145/2070781.2024180},
  doi = {10.1145/2070781.2024180},
  acmid = {2024180},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {appearance modeling, user interaction},
}
```


Acknowledgements

The authors would like to thank Stephen Lin for proofreading the paper and Matt Callcut for dubbing the video. The authors also thank GrungeTexture, Leo Reynolds, and ka2rina from Flickr, as well as Mayang.com, for providing input textures. The authors are grateful to the anonymous reviewers for their helpful suggestions and comments. Fabio Pellacini was supported by the NSF (CNS-070820, CCF-0746117), Intel, and the Sloan Foundation.