Recovering Shape and Spatially-Varying Surface Reflectance under Unknown Illumination

- Rui Xia¹,²

- Yue Dong²

- Pieter Peers³

- Xin Tong²

- ¹ University of Science and Technology of China

- ² Microsoft Research

- ³ College of William & Mary

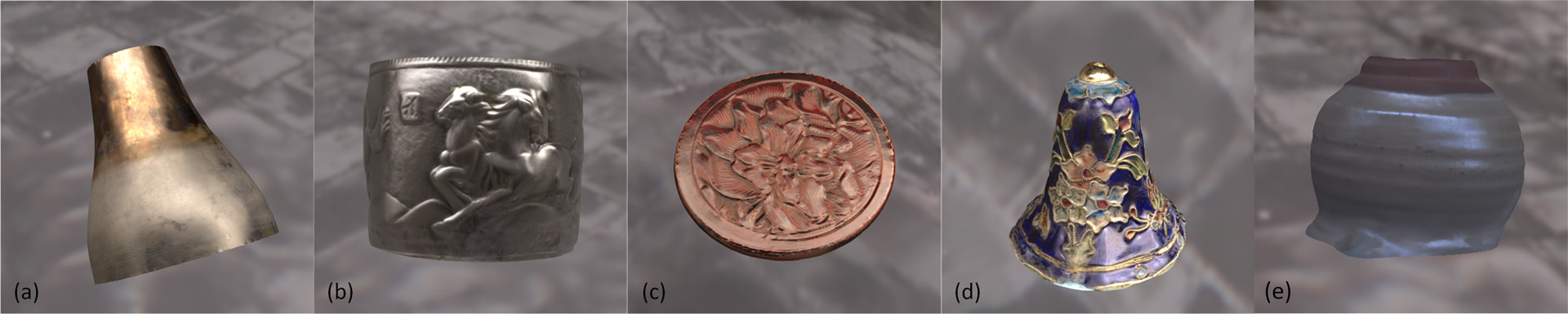

Shape and spatially-varying surface reflectance recovered from a video of a rotating object under unknown illumination.

Abstract

We present a novel integrated approach for estimating both spatially-varying surface reflectance and detailed geometry from a video of a rotating object under unknown static illumination. Key to our method is decoupling the recovery of normals and surface reflectance from the estimation of surface geometry. We define, for each point in space (including points not on the object's surface), an apparent normal and corresponding reflectance that best explain the observations. We observe that the object's surface passes through points where the apparent normal field and corresponding reflectance are highly consistent with the observations. However, estimating the apparent normal field requires knowledge of the unknown incident lighting. We therefore formulate the recovery of shape, surface reflectance, and incident lighting as an iterative process that alternates between estimating shape and lighting, and recovers surface reflectance at each step. To recover the shape, we first construct an initial surface that passes through locations with consistent apparent temporal traces, and then refine it to maximize the consistency of the surface normals with the underlying apparent normal field. To recover the lighting, we rely on appearance-from-motion using the geometry recovered in the previous step. We demonstrate our integrated framework on a variety of synthetic and real test cases exhibiting a wide range of materials and shapes.
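The consistency test at the heart of this approach can be illustrated with a toy sketch. The code below is not the authors' implementation; it assumes a Lambertian reflectance model (a strong simplification of spatially-varying reflectance), lighting that is already known and represented as a few directional lights, a turntable rotation about the y axis, and per-point temporal traces that have already been extracted from the video. For a sample point's trace, it searches a set of candidate normals, fits the albedo in closed form by least squares, and reports the smallest residual. All function names (`fibonacci_sphere`, `rotate_y`, `shading`, `apparent_normal`) are hypothetical. An off-surface point is modeled, again as an assumption, as a trace stitched together from two different surface points, since such a point projects onto different parts of the object as it rotates.

```python
import math


def fibonacci_sphere(n):
    """Roughly uniform unit vectors on the sphere, used as candidate normals."""
    golden = math.pi * (3.0 - math.sqrt(5.0))
    pts = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n
        r = math.sqrt(max(0.0, 1.0 - z * z))
        phi = golden * i
        pts.append((r * math.cos(phi), r * math.sin(phi), z))
    return pts


def rotate_y(v, angle):
    """Rotate vector v about the y axis (the assumed turntable axis)."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    return (c * x + s * z, y, -s * x + c * z)


def shading(normal, lights):
    """Lambertian irradiance from directional lights given as (direction, intensity)."""
    total = 0.0
    for d, e in lights:
        total += e * max(0.0, normal[0] * d[0] + normal[1] * d[1] + normal[2] * d[2])
    return total


def apparent_normal(trace, angles, lights, candidates):
    """Return (residual, normal, albedo) for the candidate normal that best explains the trace."""
    best = None
    for n in candidates:
        s = [shading(rotate_y(n, a), lights) for a in angles]
        ss = sum(x * x for x in s)
        if ss == 0.0:
            continue  # this normal is never lit, so the albedo is undetermined
        albedo = sum(x * y for x, y in zip(s, trace)) / ss  # closed-form least squares
        res = sum((albedo * x - y) ** 2 for x, y in zip(s, trace))
        if best is None or res < best[0]:
            best = (res, n, albedo)
    return best


# Toy setup (all values are illustrative assumptions).
candidates = fibonacci_sphere(200)
lights = [((0.0, 0.0, 1.0), 1.0), ((-0.6, 0.8, 0.0), 0.5)]  # key light + fill light
angles = [2.0 * math.pi * k / 36 for k in range(36)]        # 36 frames over one turn

# True normals are taken from the candidate set so the on-surface fit is exact.
normal_a, normal_b = candidates[40], candidates[160]
on_trace = [0.6 * shading(rotate_y(normal_a, a), lights) for a in angles]
# Off-surface point: sees surface point A for half the turn, then surface point B.
off_trace = on_trace[:18] + [0.3 * shading(rotate_y(normal_b, a), lights) for a in angles[18:]]

on_res, _, on_albedo = apparent_normal(on_trace, angles, lights, candidates)
off_res, _, _ = apparent_normal(off_trace, angles, lights, candidates)
print(f"on-surface residual {on_res:.2e}, off-surface residual {off_res:.2e}")
```

The on-surface trace is explained almost exactly by a single normal and albedo, while the stitched off-surface trace is not, which is the kind of signal that lets a surface be placed through consistent points. Because the lighting is held fixed here, the sketch covers only the shape side of the alternation; the lighting step (appearance-from-motion) is not shown.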

Keywords: Appearance Modeling, Geometry Reconstruction, Unknown Lighting

Acknowledgements: We wish to thank Guojun Chen for rendering and video compositing, and the anonymous reviewers for their constructive feedback. Pieter Peers was partially funded by NSF grants IIS-1217765 and IIS-1350323, and by a gift from Google.