Learning Texture Generators for 3D Shape Collections

from Internet Photo Sets

- Rui Yu1,2

- Yue Dong2

- Pieter Peers3

- Xin Tong2

- 1University of Science and Technology of China

- 2Microsof Research Asia

- 3 College of William & Mary

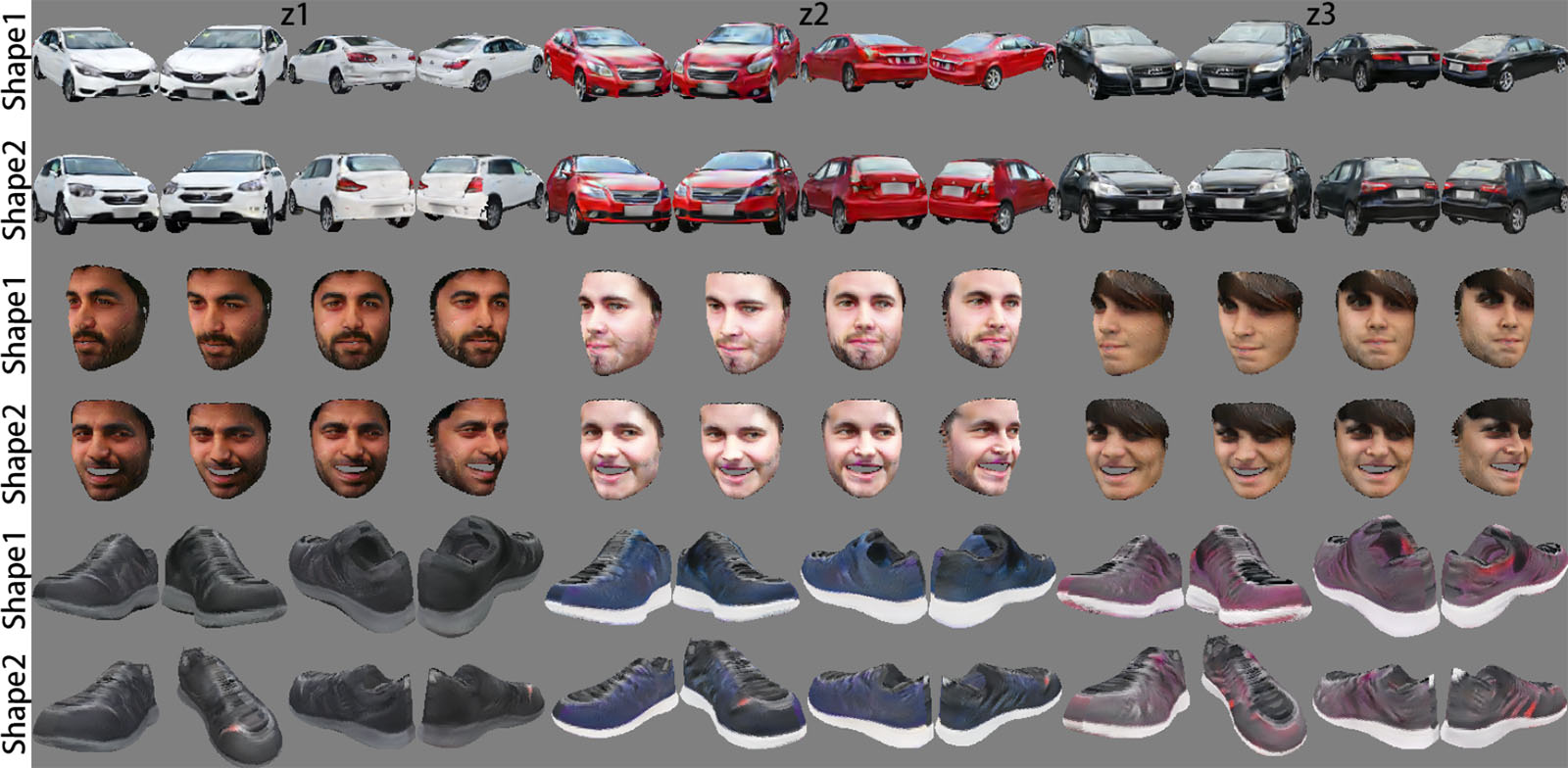

Rendering results of synthesized textures conditioned on the shape. For each example, we vary the shape, shown for 4 different views, over the columns (i.e., fixed z) and the texture over the rows (i.e., different z).

Abstract

We present a method for decorating existing 3D shape collections by learning a tex- ture generator from internet photo collections. We condition the StyleGAN [15] texture generation by injecting multiview silhouettes of a 3D shape with SPADE-IN [23]. To bridge the inherent domain gap between the multiview silhouettes from the shape col- lection and the distribution of silhouettes in the photo collection, we employ a mixture of silhouettes from both collections for training. Furthermore, we do not assume each exemplar in the photo collection is viewed from more than one vantage point, and lever- age multiview discriminators to promote semantic view-consistency over the generated textures. We verify the efficacy of our design on three real-world 3D shape collections.

KeywordsGAN, Texture generation Paper and video |

Trained model and codeAcknowledgementsPieter Peers was supported in part by NSF grant IIS-1909028. |