Object-based Illumination Estimation with Rendering-aware Neural Networks

- 1Microsoft Research Asia

- 2Zhejiang University

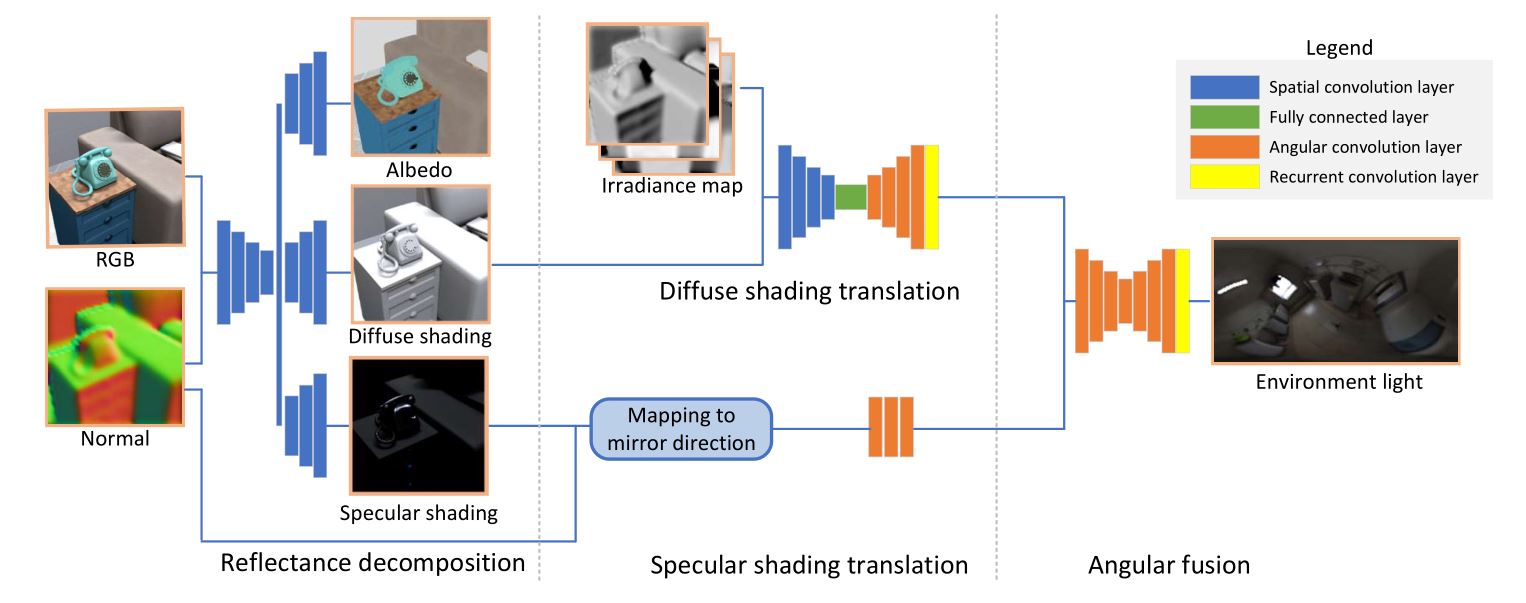

Overview of our system. The input RGB image crop is first decomposed into albedo, diffuse shading, and specular shading maps. This decomposition is assisted by shape information from a normal map calculated from rough depth data. The diffuse shading is translated into the angular lighting domain with the help of auxiliary irradiance maps computed from the rough depth, and the specular shading is geometrically mapped to its mirror direction. The translated features are then processed in the angular fusion network to generate the final environment light estimate.
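The geometric mapping of specular shading to mirror directions can be illustrated with the standard reflection formula r = 2(n·v)n − v, where n is the surface normal and v points from the surface toward the camera. The sketch below is only an illustration under stated assumptions, not the system's implementation: the function names `mirror_direction` and `splat_specular`, the latitude-longitude angular layout, the 8×16 map resolution, and the per-bin averaging are all hypothetical choices made for this example.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)

def mirror_direction(normal, view):
    """Reflect the view vector (surface -> camera) about the normal.

    Standard mirror reflection: r = 2 (n . v) n - v.
    """
    n = normalize(normal)
    v = normalize(view)
    n_dot_v = sum(a * b for a, b in zip(n, v))
    return tuple(2.0 * n_dot_v * a - b for a, b in zip(n, v))

def splat_specular(pixels, height=8, width=16):
    """Average specular intensities into a lat-long angular map.

    pixels: iterable of (normal, view, intensity) tuples.
    The lat-long layout and resolution are assumptions for this sketch.
    """
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for normal, view, intensity in pixels:
        x, y, z = mirror_direction(normal, view)
        theta = math.acos(max(-1.0, min(1.0, y)))   # polar angle from +y, in [0, pi]
        phi = math.atan2(x, z) % (2.0 * math.pi)    # azimuth around y, in [0, 2*pi)
        row = min(int(theta / math.pi * height), height - 1)
        col = min(int(phi / (2.0 * math.pi) * width), width - 1)
        total[row][col] += intensity
        count[row][col] += 1
    # Bins that received no sample stay at zero.
    return [[t / c if c else 0.0 for t, c in zip(t_row, c_row)]
            for t_row, c_row in zip(total, count)]
```

For example, `splat_specular([((0, 1, 0), (0, 1, 0), 2.0)])` places the single sample in the bin containing the +y direction. Bins with no samples remain empty, which is one reason a sparse mapped specular signal like this would still need further processing before it can serve as a lighting estimate.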

Abstract

We present a scheme for fast environment light estimation from the RGBD appearance of individual objects and their local image areas. Conventional inverse rendering is too computationally demanding for real-time applications, and the performance of purely learning-based techniques may be limited by the meager input data available from individual objects. To address these issues, we propose an approach that takes advantage of physical principles from inverse rendering to constrain the solution, while also utilizing neural networks to expedite the more computationally expensive portions of its processing, increase robustness to noisy input data, and improve temporal and spatial stability. This results in a rendering-aware system that estimates the local illumination distribution at an object with high accuracy and in real time. With the estimated lighting, virtual objects can be rendered in AR scenarios with shading that is consistent with the real scene, leading to improved realism.

Keywords: Lighting estimation, Inverse rendering

Paper and video