Deferred Neural Lighting: Free-viewpoint Relighting from Unstructured Photographs

- 1 Tsinghua University

- 2 Microsoft Research Asia

- 3 College of William & Mary

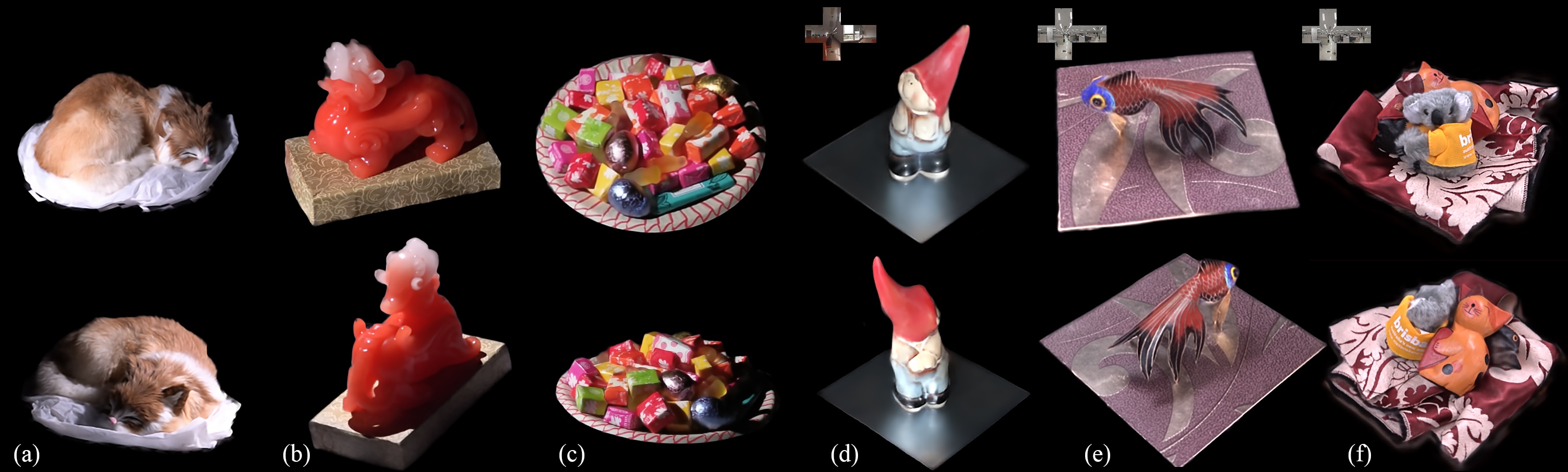

Fig. 1. Examples of scenes captured with a handheld dual camera setup, and relit using deferred neural lighting. (a) A cat with non-polygonal shape (i.e., fur), (b) a translucent Pixiu statuette, and (c) a candy bowl scene with complex shadowing. All three scenes are relit with a directional light. (d) A gnome statue on a glossy surface, (e) a decorative fish on a spatially varying surface, and (f) a cluttered scene with a stuffed Koala toy, a wooden toy cat and anisotropic satin. All three scenes are relit by the natural environment maps shown in the insets.

Abstract

We present deferred neural lighting, a novel method for free-viewpoint relighting from unstructured photographs of a scene captured with handheld devices. Our method leverages a scene-dependent neural rendering network for relighting a rough geometric proxy with learnable neural textures. Key to making the rendering network lighting aware are radiance cues: global illumination renderings of a rough proxy geometry of the scene for a small set of basis materials and lit by the target lighting. As such, the light transport through the scene is never explicitly modeled, but resolved at rendering time by a neural rendering network. We demonstrate that the neural textures and neural renderer can be trained end-to-end from unstructured photographs captured with a double hand-held camera setup that concurrently captures the scene while being lit by only one of the cameras’ flash lights. In addition, we propose a novel augmentation refinement strategy that exploits the linearity of light transport to extend the relighting capabilities of the neural rendering network to support other lighting types (e.g., environment lighting) beyond the lighting used during acquisition (i.e., flash lighting). We demonstrate our deferred neural lighting solution on a variety of real-world and synthetic scenes exhibiting a wide range of material properties, light transport effects, and geometrical complexity.
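The linearity of light transport mentioned above can be illustrated with a minimal sketch: an image of a scene under several lights equals the weighted sum of the images under each light alone. This is only the underlying superposition property, not the paper's augmentation or refinement procedure. The function name `relight_by_superposition` and the array layout are assumptions made for illustration, and the inputs are assumed to hold linear (not gamma-encoded) radiance.

```python
import numpy as np


def relight_by_superposition(basis_images, weights):
    """Weighted sum of single-light images (hypothetical helper, not from the paper).

    basis_images: array of shape (K, H, W, 3); image k is lit only by light k.
    weights: array of shape (K,) or (K, 3); intensity (optionally per RGB
             channel) of light k in the target lighting.
    Returns an (H, W, 3) image under the combined lighting.
    """
    basis_images = np.asarray(basis_images, dtype=np.float64)
    weights = np.asarray(weights, dtype=np.float64)
    if weights.ndim == 1:
        # Use the same scalar intensity for all three color channels.
        weights = np.repeat(weights[:, None], 3, axis=1)
    if weights.shape != (basis_images.shape[0], 3):
        raise ValueError("weights must have shape (K,) or (K, 3)")
    # Linearity: sum over lights k of weight[k, c] * image[k, h, w, c].
    return np.einsum("khwc,kc->hwc", basis_images, weights)
```

Under these assumptions, an environment map could be approximated by sampling it at K directions and using the sampled radiance values as the weights for K directionally lit images.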

Keywords

Appearance modeling, Inverse rendering

Paper and video | Trained model and code

Acknowledgements

We would like to thank the reviewers for their constructive feedback, Nam et al. [2018] for kindly agreeing to help with the comparison with their method, and Xu et al. [2018] for sharing their trained network (Figure 10). Pieter Peers was partially supported by NSF grant IIS-1909028. Duan Gao and Kun Xu are supported by the National Natural Science Foundation of China (Project Numbers: 61822204, 61932003, 61521002).